Whoever said the human brain is the most highly organized collection of matter in the universe was more correct than they could have known. New research modeled tiny structures within nerve cells and discovered a clever tactic brains use to increase computing power while maximizing energy efficiency. The brain's design could form the basis of a whole new and improved class of computer.

Neurobiologists from the Salk Institute of La Jolla, California, and the University of Texas, Austin, collaborated to build 3-D computer models that mimic tiny sections of rat hippocampus—a brain region in mammals where neurons constantly process and store memories. One of the models, published in the biological journal eLife, helped reveal that the sizes of synapses change within minutes.1

Synapses occur at junctions between nerve cells, like two people holding hands. Each cell can have a thousand "hands" contacting as many neighbors to form a dizzying 3-D array with billions of connections and pathways. Each junction transfers information between cells by passing along tiny chemicals called neurotransmitters.

Groundbreaking imaging published in 2011 revealed many more of these nerve-to-nerve connections than previously imagined, prompting comparisons between the number of connections in the human brain and the number of switches in all the computers and internet connections on Earth.2 It turns out that the sizes of these synapses shift with use or disuse, a process called synaptic plasticity. Synapses strengthen when learning occurs or weaken when unused.

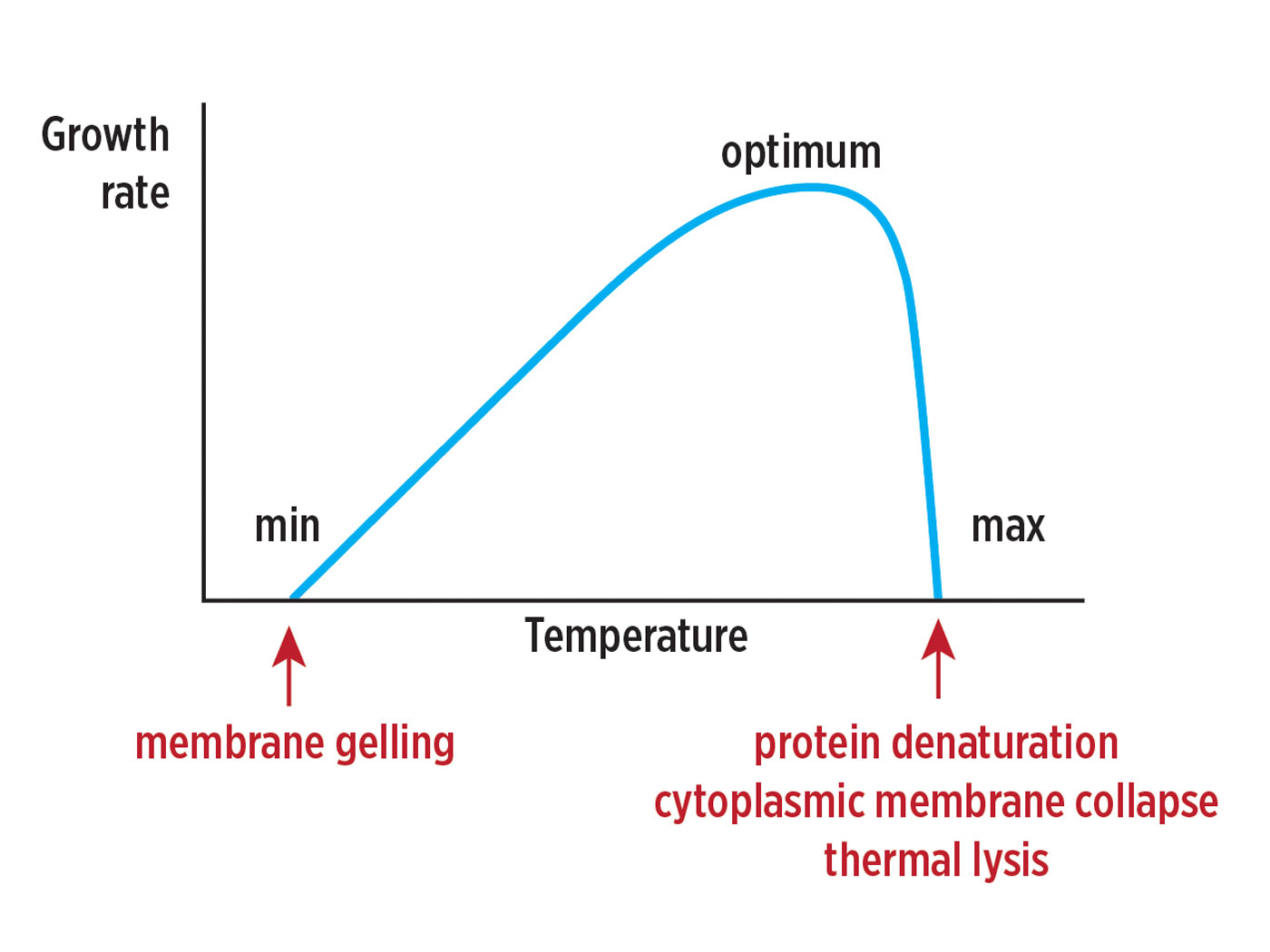

How do brains pack in so much information? They store and transmit it not with the simplistic 0s and 1s of computer code, but with degrees of synapse strength. Rather than treating each connection as a simple on-or-off switch, nerve cells distinguish 26 different levels of synaptic strength. Authors of the new research looked for possible advantages to this complicated molecular variability. They wrote that synapses "might reflect a sampling strategy designed for energetic efficiency."1 Nerve cells use the size and stability of each synapse to process and record information such as memories.
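The difference between a two-state switch and a 26-level synapse can be put in numbers. The short Python sketch below assumes, for illustration only, that every strength level is equally likely, so the information stored is the base-2 logarithm of the number of levels. The function name `bits_per_synapse` is hypothetical and does not come from the eLife study.

```python
import math

# Number of distinguishable synaptic strength levels cited in the article.
DISTINGUISHABLE_LEVELS = 26


def bits_per_synapse(levels: int) -> float:
    """Bits stored by one element with `levels` equally likely states.

    Assumption: all states are equally likely, so capacity = log2(levels).
    """
    return math.log2(levels)


binary_bits = bits_per_synapse(2)                        # a plain 0/1 switch
synapse_bits = bits_per_synapse(DISTINGUISHABLE_LEVELS)  # a 26-level synapse

print(f"binary switch:    {binary_bits:.1f} bit")
print(f"26-level synapse: {synapse_bits:.1f} bits")
```

Under that assumption, a single synapse holds roughly 4.7 bits, compared with 1 bit for a binary switch.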

Separate research, published in Nature Communications, found that biochemical communication inside each synapse constantly monitors and adjusts synaptic plasticity.3 This "plasticity-enabling mechanism" includes positive feedback loops and a safety mechanism to prevent cell death, according to a research summary article published in Nature by Christine Gee and Thomas Oertner of the Center of Molecular Neurobiology Hamburg.4

Terry Sejnowski, co-senior author of the eLife study, told the Salk Institute,

"We discovered the key to unlocking the design principle for how hippocampal neurons function with low energy but high computation power. Our new measurements of the brain's memory capacity increase conservative estimates by a factor of 10 to at least a petabyte, in the same ballpark as the World Wide Web."5

What's a petabyte? It is one quadrillion bytes, or 8,000,000,000,000,000 bits of information. The mind-boggling levels of organization and necessary regulatory protocols in synapses refute all notions that brains evolved from single cells through merely natural processes. The strategies, algorithms, and design principles brains employ could only have come from an otherworldly Architect whose genius mankind can only dream of copying.6

References

1. Bartol Jr., T. M. et al. 2015. Nanoconnectomic upper bound on the variability of synaptic plasticity. eLife. doi: 10.7554/eLife.10778.

2. Thomas, B. Brain's Complexity 'Is Beyond Anything Imagined'. Creation Science Update. Posted on icr.org January 17, 2011, accessed January 20, 2016.

3. Tigaret, C. M. et al. 2016. Coordinated activation of distinct Ca2+ sources and metabotropic glutamate receptors encodes Hebbian synaptic plasticity. Nature Communications. 7: 10289.

4. Gee, C. E. and T. G. Oertner. 2016. Pull out the stops for plasticity. Nature. 529 (7585): 164-165.

5. Memory capacity of brain is 10 times more than previously thought. Salk News. Posted on salk.edu January 20, 2016, accessed January 20, 2016.

6. "The new work also answers a longstanding question as to how the brain is so energy efficient and could help engineers build computers that are incredibly powerful but also conserve energy" (Salk News).

*Mr. Thomas is Science Writer at the Institute for Creation Research.

Article posted on February 4, 2016.